Abstract: NATO’s mission is the protection of its member states’ territorial sovereignty, political security, and stability. Developments in geo- and military-strategic scopes are insufficient to achieve this aim ultimately. The future war increasingly takes place in cyber and information space to undermine the worldview and integrity of the general population and military personnel through digital and psychological interventions. To stabilise the resilience of citizens and, thereby, the democratic resilience of states in times of digital information diversity, the ability to select, assess, evaluate, and use information in a goal-oriented manner is crucial. The psychological construct of Critical Thinking (CT) comprises these vital abilities. Due to the importance of efficiently dealing with information for military personnel, NATO has developed a Critical Thinking training course to increase this ability in an intervention-based manner. In this experimental study, we examine the effect of the Critical Thinking course to determine whether one’s Critical Thinking ability can be increased in general and whether NATO’s course is an effective intervention. A pilot experimental online study was conducted with n = 85 subjects (intervention group vs. control group) and a correlational study with n = 122 subjects. Surprisingly, comparing the experimental and control group indicated that processing the pre-test and post-test alone improved Critical Thinking skills within the subjects. However, there was also a trend of interaction between group and time, indicating the positive influence of the Critical Thinking course. The analysis of demographic influences only indicated residency as a significant factor regarding Critical Thinking ability. The results of this study indicate that Critical Thinking ability is increasable. 
Future studies should examine which interventional aspects are most effective in raising the Critical Thinking ability to construct evidence-based efficient Critical Thinking courses for the military and the general population.

Problem statement: Does NATO’s self-designed Critical Thinking course show its effect, and are there factors influencing Critical Thinking?

Bottom-line-up-front: Critical Thinking can be increased through an online intervention.

So what?: This study shows that Critical Thinking—which is associated with improved information handling, planning, decision-making skills, and moral resilience—is a changeable skill. Therefore, military and civil institutions should explore the construct in more depth and, based on the results, develop effective interventions to train their personnel, especially their leaders.

The Power of Critical Thinking

[…] As excellent as our cognitive systems are, in the modern world we must know when to discount them and turn our reasoning over to instruments — the tools of logic, probability, and Critical Thinking that extend our powers of reason beyond what nature gave us. Because in the twenty-first century, when we think by the seat of our pants, every correction can make things worse, and can send our democracy into a graveyard spiral[1].

The quote above is how Steven Pinker, an American psychology professor at Harvard University, describes the importance of Critical Thinking (CT) to society. Pinker explains that one should not rely solely on one’s given cognitive abilities and that the power of CT must be used to ensure the security of society. Due to the ever-changing circumstances of the present, CT has become a vital skill in today’s world. CT skills are critical in making well-informed, accurate, and conscious decisions: they are needed to evaluate information, form hypotheses, solve problems, and make better decisions. Thus, developing CT is necessary to meet the ever-increasing demands of 21st-century life and professions.[2]

Critical Thinking skills are increasingly necessary for education, especially for leaders.[3] Indeed, many nations, academic institutions, and organisations contend that CT skills facilitate an individual’s ability to adapt rapidly to environmental changes.[4] CT is one of the top three essential skills cited as an antidote to misinformation.[5] Nations recognise the increased need for developing CT skills that challenge the current “fake news” environment as it impacts societies and global security.

The Definition and Nomological Network of Critical Thinking

There is a wide range of various approaches and definitions related to the concept of CT. Therefore, it is a challenge to reach a consensus on one definition. For example, Dwyer et al. describe CT as a metacognitive process which consists of skills (e.g., analysis and evaluation) that, when applied, increase the likelihood of a logically correct conclusion to an argument or solution to a problem.[6]

After approximately 40 years of research on this topic, CT is defined as follows:

CT is the intellectually disciplined process of actively and skillfully conceptualising, applying, analysing, synthesising, and/or evaluating information gained or generated through observation, experience, reflection, reasoning, or communication as a guide to belief and action. CT is the process of analysing, evaluating, drawing conclusions, and interpreting resources and activities.[7]

However, the definitions of CT agree on certain points: the consensus is that CT consists of both skills and dispositions and includes affective and cognitive domains.[8], [9] Due to the ambiguity of CT, triangulation with other constructs is essential to gain an understanding and a psychometric lead to the construct. In particular, correlations with the ‘Big 5’ (neuroticism, extraversion, openness, conscientiousness, agreeableness), as a strongly validated scientific model, can be used to situate a construct in personality psychology. Therefore, correlations between the ‘Big 5’ and CT were examined. The dimension Openness is associated with creativity, curiosity, and aesthetic interest and correlates positively with CT (r = .40, p = .009). This correlation is unsurprising, as openness is also related to crystallised intelligence, and critical thinkers are described as curious, flexible, and open-minded.[10], [11]

Schön established an overarching connection between CT and metacognition, as the active control over cognitive processes, in his initial research.[12] Metacognition helps develop CT because it requires meta-level operations.[13] Both constructs represent different forms of higher-order thinking,[14] executive processes,[15] and ventures to further learning.[16] Individuals with high CT skills are also good at using metacognition.[17] Executive functions also impact CT.[18] The term executive function is a broad term that describes, among other things, a variety of higher-order cognitive functions. These let individuals decide how to behave and adapt to new situations.[19], [20]

Thinking dispositions describe the will to engage in certain thinking processes.[21], [22] This volition has implications for people’s propensity to engage in CT. Various researchers suggest that these dispositions are critical to applying CT skills.[23] Facione and Facione see truth-seeking, the need for cognition, open-mindedness, analyticity, systematicity, confidence, curiosity, and maturity as dispositions that are helpful for CT.[24] The need for cognition is considered a disposition for CT. CT is also positively associated with emotional intelligence (r = .46, p < .01) and the integrative conflict management style (r = .47, p < .01).[25]

The Role of Critical Thinking in the Military

Military operations are complex and difficult to predict. Most international operations focus on a peace-enforcing format in regional conflicts such as those in Syria and Iraq.[26] These operations are accompanied by uncertainties regarding participating parties’ intentions, capabilities, and strategies. These uncertainties require well-trained commanders, officers, and staff personnel. Former US General of the Army George Marshall said that leaders must be prepared to deal with change and unexpected difficulties so that mental processes are not inhibited when atypical events occur.[27] Military leaders find themselves in situations where the rules of engagement or historical warfighting principles cannot be applied. In these situations, novel approaches to solutions, which are the result of CT, are essential to mission success. Here, soldiers must develop new systems based on evaluated information.[28], [29] Research within the US military has shown that leaders who have completed CT training have improved in tactical command. Additionally, they produce more and better alternative and contingency plans,[30] and show better cross-cultural competence[31] and higher moral resilience.[32] The teaching and application of CT are seen as critical components for operational commanders to implement operational objectives.[33]

NATO has also seen the relevance of this issue and, in the report on Joint Operations 2030 published in 2011, cited CT as an issue that needs more research. Senior Operational Analyst at NATO’s Allied Command Transformation (ACT) Dani Fenning describes efforts to help NATO “think differently” by working with colleagues to build new capabilities using CT.[34] NATO’s Human Factors and Medicine Panel sees CT as necessary to ensure operational agility and adaptability as well as good conduct of operations in NATO’s multinational framework despite potential cultural differences in nations and branches.[35] Therefore, training on this topic is indispensable. NATO believes that CT is a learnable skill and intends to train its personnel in the skill. Thus, the former Supreme Allied Commander Transformation (SACT), General André Lanata, tasked the NATO Innovation Hub to develop a course to improve CT. Therefore, over approximately 1.5 years, the Innovation Hub developed and evaluated a CT course, and in this study, we will examine the effects of this course on the participants’ CT ability.

Critical Thinking Interventions

In academic discussions and research, CT is viewed from three perspectives: 1.) the innate trait (dispositional perspective); 2.) cognitive development (emergent perspective); and 3.) behaviour and skills (state perspective).

Various research findings show that CT can be improved using courses.[36], [37] Educational science and psychology researchers view CT as a skill that can be learned and improved.[38] Thus, certain sub-skills of CT, such as verbal reasoning skills, argument analysis skills, thinking as a hypothesis, decision-making, and problem-solving skills, can be learned without including biases[39] and connected based on elaboration. Again, the metacognitive aspect is highly relevant.

In 1998, Halpern designed a four-part empirically based model to teach and learn CT. The model comprises the following parts: 1.) The learner should be prepared for the strenuous cognitive work; 2.) There should be instruction in the skills of CT; 3.) Training should bring structural aspects of problems and arguments closer to promote the cross-contextual transfer of CT skills; and 4.) A learner’s metacognition should be encouraged to monitor progress.

As the CT intervention in this study, the NATO Critical Thinking course is designed according to Halpern’s empirically based four-part model to teach CT facets as effectively as possible.[39] There is a divergence of opinion as to whether CT can be learned or not.[40], [41] However, we hypothesise that CT can be increased through intervention and examine the effect of the NATO Critical Thinking course on CT ability.

Hypothesis 1: The CT course positively affects Critical Thinking ability.

Hypothesis 2: Participants who received the CT Course will outperform those who did not.

Because the topic of CT is more prevalent in North America than on other continents, in both military and civilian settings, it is hypothesised that subjects residing in North America will exhibit significantly better CT performance than subjects from other continents.[42]

Hypothesis 3: North American residency positively impacts Critical Thinking ability.

The research findings of Abrami et al. showed that educational level has no significant effect on CT ability, so it is assumed that there is no significant difference in pre-test scores between the educational groups.

Hypothesis 4: Educational level does not influence Critical Thinking skills.

Based on the findings of Endsley et al., it is hypothesised that individuals in the active military achieve significantly better scores than civilian individuals because they are trained in decision-making and problem-solving strategies in situations.[43]

Hypothesis 5: Military affiliation has a positive effect on Critical Thinking skills.

Method

The course’s entire process is described below. Further, the following section describes a cross-hypothesis method involving the NATO Innovation Hub’s CT course and the acquisition of samples and materials.

The NATO Critical Thinking Course

Due to the mentioned relevance of CT for NATO, the former SACT tasked the NATO Innovation Hub in late spring 2021 to develop a CT course. This course is intended to educate both NATO civilian and military personnel in CT to increase this essential skill in a time of ever-changing circumstances.

The working group decided to execute the course and assessment using an online platform hosted on the NATO Innovation Hub website due to COVID-19, despite research showing that online classes are less successful at teaching CT than in-person meetings.[44]

The course was executed between June 1, 2022, and July 1, 2022. It consisted of an introduction, five instructor-led lessons, and a summary class. The weekly learning effort and associated tasks were designed to be between 3-5 hours.

The chosen topics for the course were 1.) Cognitive Bias and Decision-Making; 2.) Cultural biases; 3.) Logic and reasoning; 4.) Improving Critical Thinking; and 5.) Data Analysis. Each lesson was divided into five subcategories, in line with Halpern’s empirical model for teaching and learning CT: 1.) Lesson outline, which introduced the lesson’s topics so that the learner could understand what to expect; 2.) Lesson materials, consisting of PowerPoint presentations by the lecturer (approx. 20 – 30 minutes) and videos; 3.) A quiz of 5-6 multiple-choice questions assessing the targeted content; 4.) Assignments, which required a transfer of knowledge and skills; and 5.) Other resources, which offered further material on the lesson topic for those interested.

Approximately three days after the lessons were released, participants could attend a Q&A session (approx. 1 hour) with the instructor. The instructors were experts in their field and diverse in knowledge, experience, and expertise. Again, the intention was to represent as diverse a spectrum of views and approaches as possible. The lecturers were free to choose how they structured and delivered their lessons.

Participants and Procedure

The sample of this study participated in the CT Assessment of the NATO Innovation Hub from June 1, 2022, to July 1, 2022, consisting of n = 156 participants for the pre-test and n = 85 participants (male = 75.3%) for the pre- and post-test. N = 40 participants with a mean age of 35.57 years ([21, 72], SD = 10.50, Md = 33.00; male = 77.5%) were in the intervention group and n = 45 participants with a mean age of 35.57 years ([21, 72], SD = 10.50, Md = 33.00; male = 73.3%) were in the control group. N = 47 participants reported having at least a master’s degree or equivalent, and n = 30 participants (35.3%) reported being active military personnel.

Participants in this study were primarily recruited through LinkedIn. A link was shared there, allowing interested participants to self-select for either having their CT skills measured or participating in the course. After successful participation in the course (completing the pre-test & post-test, all quizzes, and assignments), participants in the intervention group were awarded a certificate.

A 2×2 mixed design was used to examine the course effect (between-subject factor: course participation vs no course participation) x (within-subject factor measurement repetition: pre-test vs post-test). The pre-test and post-test were administered on the NATO Innovation Hub online platform before “Lesson 1” and after “Lesson 5,” respectively. Interested participants registered in advance of the course.

Measurement

At the beginning of the online survey, participants had to consent to a privacy statement for participation, followed by instructions and a practice section explaining the platform’s functionality. The online assessment consisted of the Selected Response Questions (SRQs) as a subtest of the Collegiate Learning Assessment (CLA+). The demographic data were collected at the end of the testing. The CLA+ is an instrument for measuring CT and written communication. In its complete form, the CLA+ includes a 60-minute performance task (PT) and a 30-minute set of selected response questions (SRQs). In this study, only the SRQs were used for reasons of economy. The SRQ section presents participants with 25 multiple-choice items, each with four response options (one being correct), and one or two documents to refer to when answering each question. The supporting documents included a range of information sources such as letters, memos, photographs, charts, and newspaper articles. SRQ composite score values can range from 400 to 1600. The SRQs consisted of three subsections: Scientific and Quantitative Reasoning (SQR), Critical Reading and Evaluation (CRE), and Critiquing an Argument (CA); they show good reliability (α = .79).

Data Analysis

Statistical data were analysed using SPSS (IBM SPSS Statistics version 27). A paired t-test (a statistical test that compares the means of two related measurements) was calculated to examine the difference between pre-test and post-test CLA+ scores (H1). A two-way mixed ANOVA with repeated measures was computed to explore the course’s efficacy in contrast to the control group without intervention (H2). T-tests for independent samples were used to examine CT ability differences (H3; H5). Finally, a one-way ANOVA was used to compare multiple groups regarding differences in CT ability (H4).
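To make the paired comparison used for H1 concrete, the following is a minimal sketch of a paired t-test in plain Python. It is not the SPSS procedure used in the study; the function name `paired_t` and the five score pairs are hypothetical and serve only as an illustration. Differences are taken as pre-test minus post-test, which is an assumption about the sign convention.

```python
import math
import statistics


def paired_t(pre, post):
    """Return (t, df) for a paired t-test on the differences pre - post."""
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    # Standard error of the mean difference (sample SD with n - 1 in the denominator).
    se = statistics.stdev(diffs) / math.sqrt(n)
    return mean_diff / se, n - 1


# Hypothetical scores for five participants (NOT data from the study).
pre_scores = [1000, 1050, 1100, 1150, 1200]
post_scores = [1040, 1070, 1150, 1180, 1260]

t_value, df = paired_t(pre_scores, post_scores)
print(f"t({df}) = {t_value:.2f}")
```

With this sign convention, an improvement from pre-test to post-test yields a negative t-value.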

Results

Hypothesis testing

Hypothesis 1 predicts that participation in the CT course positively affects CT ability. The average post-test score should therefore be higher than the average pre-test score. The paired t-test indicates a significant improvement in the score after the intervention group completed the course, t(84) = –6.02, p < .001. Thus, H1 can be accepted.

For H2, it is assumed that the assessment scores improve significantly between the two measurement points (pre-test to post-test) in the intervention group but not in the control group. A significant difference would suggest an impact of the course on CT ability. The normality precondition was not met for the control group’s post-test scores. Nevertheless, the mixed ANOVA was calculated since a normal distribution can be assumed for n > 30.[45]

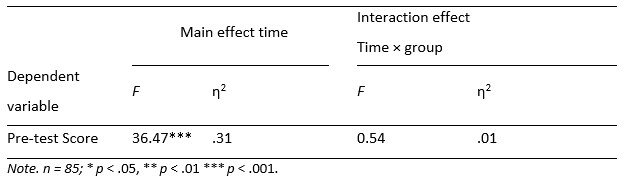

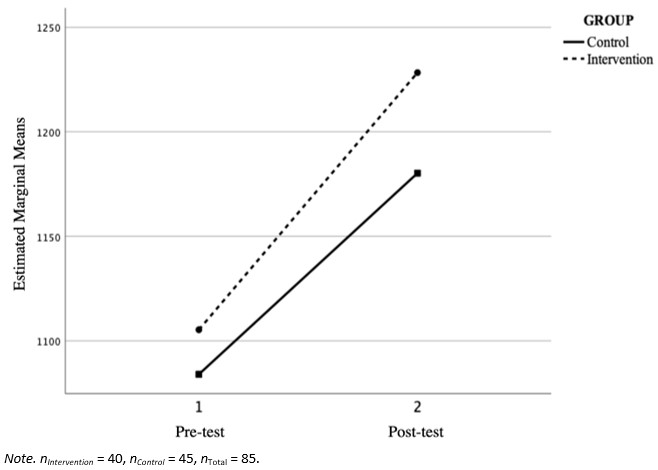

For H2, the mixed ANOVA showed no significant interaction between time (pre-test vs post-test) and group membership, F(1, 83) = 0.54, p = .463, partial η² = .01. A significant main effect of time occurred, F(1, 83) = 36.47, p < .001, partial η² = .31, indicating that all participants improved from pre-test to post-test regardless of group membership. The results of the mixed ANOVA are shown in Table 1 and Figure 1. Thus, H2 can be rejected.

Table 1; Results of 2×2 mixed ANOVA: main effects of time and group, and interaction effect of time × group.

Figure 1; Interaction effect (time × group) for the variables’ pre-test score and post-test score.
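The effect sizes reported for the mixed ANOVA can be recovered from each F-value and its degrees of freedom. The sketch below assumes that they are partial eta squared values and uses the standard identity partial η² = (F · df_effect) / (F · df_effect + df_error). The function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared computed from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F-values and degrees of freedom as reported for H2.
interaction = partial_eta_squared(0.54, 1, 83)
time_effect = partial_eta_squared(36.47, 1, 83)

print(f"time x group: {interaction:.2f}")
print(f"time:         {time_effect:.2f}")
```

Both results round to the reported values (.01 for the interaction and .31 for the main effect of time).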

Hypothesis 3 assumes that residency influences CT ability so that individuals from North America achieve better scores than individuals from other continents. Therefore, a t-test for independent samples was calculated, as the prerequisites for a t-test were met.

The calculations showed that there was a significant difference in CT ability as a function of residency, with subjects from North America (M = 1163.96, SD = 225.07) scoring on average about 88 points higher than subjects from other continents (M = 1075.71, SD = 176.68), t(120) = –2.13, p = .036, CI95% [–170.455, –6.051]. Thus, H3 can be accepted.
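As a consistency check, the reported confidence interval, mean difference, and t-value for H3 can be related to one another. The sketch below assumes a symmetric two-sided 95% interval around the mean difference (other continents minus North America) and uses the tabulated critical value of about 1.9799 for 120 degrees of freedom. The variable names are hypothetical.

```python
# Values as reported for H3.
ci_lower, ci_upper = -170.455, -6.051
mean_north_america, mean_other = 1163.96, 1075.71

# Assumption: two-sided 95% critical t-value for df = 120 (standard t-table value).
T_CRIT_DF120 = 1.9799

midpoint = (ci_lower + ci_upper) / 2      # centre of the interval = mean difference
half_width = (ci_upper - ci_lower) / 2    # margin of error
standard_error = half_width / T_CRIT_DF120
t_from_ci = midpoint / standard_error

print(f"mean difference from CI:    {midpoint:.2f}")
print(f"mean difference from means: {mean_other - mean_north_america:.2f}")
print(f"t reconstructed from CI:    {t_from_ci:.2f}")
```

The interval midpoint matches the difference between the two group means, and the reconstructed t-value is close to the reported t(120) = –2.13.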

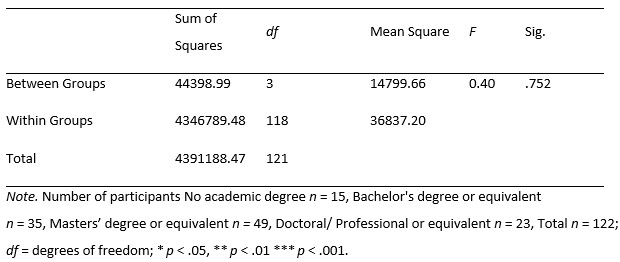

For Hypothesis 4, it is assumed that the education level has no significant influence on participants’ CT ability. To test H4, a one-way ANOVA was undertaken to examine whether there was a difference in pre-test scores as a function of education level. Education level was divided into four groups: No academic degree (n = 15, M = 1074.20, SD = 149.04), Bachelor’s degree or equivalent (n = 35, M = 1090.89, SD = 205.85), Master’s degree or equivalent (n = 49, M = 1115.65, SD = 184.66), and Doctoral/Professional or equivalent (n = 23, M = 1068.26, SD = 208.70). The group with no academic degree contained two outliers, which we did not remove from the sample.[45] Pre-test scores did not differ statistically significantly for the different education levels, F(3, 121) = .40, p = .752, η² = .01. Results are shown in Table 2. Thus, H4 can be accepted.

Table 2; Results of One Way ANOVA for differences between Education Level in pre-test score.
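The one-way ANOVA for H4 can be approximately reproduced from the group sizes, means, and standard deviations given in the text alone. The following is a minimal sketch rather than the SPSS procedure used in the study. The function name is hypothetical, and the sketch uses the conventional degrees of freedom k − 1 = 3 between groups and N − k = 118 within groups.

```python
def anova_from_summary(groups):
    """One-way ANOVA from summary statistics.

    groups: list of (n, mean, sd) tuples, one per group.
    Returns (F, df_between, df_within, eta_squared).
    """
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    # Between-group sum of squares: weighted squared deviations of the group means.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    # Within-group sum of squares, recovered from each group's sample SD.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_value, df_between, df_within, eta_squared


# (n, M, SD) per education level as reported for H4.
education_groups = [
    (15, 1074.20, 149.04),  # No academic degree
    (35, 1090.89, 205.85),  # Bachelor's degree or equivalent
    (49, 1115.65, 184.66),  # Master's degree or equivalent
    (23, 1068.26, 208.70),  # Doctoral/Professional or equivalent
]

f_value, df_b, df_w, eta_sq = anova_from_summary(education_groups)
print(f"F({df_b}, {df_w}) = {f_value:.2f}, eta squared = {eta_sq:.2f}")
```

Because the inputs are rounded summary statistics, the result can differ slightly from an analysis of the raw scores. Here it rounds to the reported F = .40 and η² = .01.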

Hypothesis 5 assumed that military affiliation impacts CT ability and that military individuals achieve a better pre-test score than civilian individuals. A t-test for independent samples was calculated to examine group differences, as the prerequisites for the calculations were met.

The results indicate there is no significant difference in average CT ability between active military personnel (M = 1065.98, SD = 175.04) and civilians (M = 1111.19, SD = 198.18), t(120) = –1.27, p = .207. Thus, H5 can be rejected.

Discussion

The Evaluation of the Critical Thinking Course

Regarding the first objective of this work, participants in the intervention group increased their CT ability between the pre- and post-test. These results align with the findings of Abrami et al. (2008). However, an improvement in the score in the control group was also evident. Therefore, participating in the CT assessment alone may have improved average assessment scores. Nevertheless, Figure 1 indicates a trend towards a small interaction effect, so a larger sample in the respective groups could yield a significant interaction effect.

Several potential factors can explain the lack of a significant difference between the intervention and control group regarding the CT post-test scores. First, individuals from the intervention group may not have carefully followed and processed the lectures and the associated tasks and may only have been concerned with giving answers so that they would receive the certificate at the end of the course. A cursory inspection of the answers given supports this assumption. This focus on obtaining the certificate rather than improving CT skills may have led to a loss of motivation and, thus, a lower post-test score.[46] On the other hand, the learning behaviour of the control group between the two measurement points could not be controlled. Possibly, the initial feedback also motivated control group participants to increase their CT ability, so that they studied topics related to the construct on their own.

Further, the pre-test alone may have had an “intervention effect.” Thus, there might have been a triggering of metacognitive factors such as information management strategies, monitoring, and debugging strategies in the absence of knowledge of answers, thus resulting in an impulse to CT.

In addition, there may have been a repetition effect between the pre-test and post-test. Also, a randomised controlled trial (RCT) could minimise interpersonal group differences and provide more accurate results by decreasing the noise to capture the intervention’s effect more distinctly.

The results show that CT training facilitates the development of CT skills and that a course and even a test of CT skills may be more efficient than waiting for an individual to acquire CT skills via experience over time.

Influencing Factors on Critical Thinking Ability

Hypothesis H3 assumed that individuals with residency in North America would have better CT ability than individuals with residence in other continents because of the attention that CT is given in North America. The results of this study confirmed these findings. In addition to the attention that CT receives in North America, the results of this study may also indicate that American culture endorses a type of interaction that reinforces CT and allows for open exchange.[47]

Further, based on the research findings of Abrami et al. (2018), it was hypothesised that the level of education does not affect CT ability (H4). Again, the results of this study are aligned with previous research. These results could show that the ambitions of teaching CT are pursued equally at all levels of education. Hypothesis H5 assumed that individuals with military affiliation would have better CT ability than civilians. However, the results showed no significant difference. The lack of difference could indicate that the military does not provide adequate training in CT[48] or that the topic is also receiving increasing attention and is taught in the civilian world.[49]

Limitations and Outlook for Research

Participation in the research was voluntary and implied an existing interest in the subject of CT. Further, the intervention group was eligible to receive a certificate upon finishing all lessons and the assessments. This external motivation potentially caused individuals to focus only on the incentive and not adequately complete the course. In contrast, the control group could only receive their score information as an incentive. However, this offer was hardly taken up, so it can be assumed that strong intrinsic motivation was prevalent in the control group. Future research could use an alternative course with a different subject (e.g., a language) to incentivise the control group and partially control for intrinsic engagement with CT due to time expenditure with another topic.

Another point of criticism is the sample size. Hypothesis H3 assumed that individuals with residency in North America would have better CT ability than individuals with residence in other continents because of the attention that CT is given in North America. There is, therefore, a possibility that the testing conditions differed. The pre-tests and the post-tests were administered on different days and, in some cases, restarted at different course times, reducing the comparability between the groups.

Furthermore, there may have been misunderstandings regarding the questions. For example, several subjects were not native English speakers, which may have led to incorrect answers due to a lack of understanding. Despite the limitations, the study results are meaningful for a deeper understanding and future research on CT.

Practical Implications and Conclusion

This study indicates that a CT intervention can increase CT ability. However, it also suggests that a test alone improves CT ability and could, therefore, also function as an intervention. Even though there was no significant difference between the intervention and control group post-test scores, an interaction trend could be recognised in which the intervention group slightly differed from the control group after completing the CT course.

As NATO plans to train its personnel on this topic in the future, the findings of this work could be considered for future training projects. For example, a test can be conducted to prepare the participants and to set intrinsic incentives to deal with this topic. Further, no factor other than residency in North America was found to influence CT ability. Thus, it can be argued that every individual can improve their CT ability and should strive to do so. The fact that individuals from North America have a better CT ability than individuals from other continents may be related to the relevance of this topic on the continent. Thus, by increasing the awareness of CT, other militaries and societies in general could increase the CT abilities of their residents.

In conclusion, this work can provide a foundation for future teaching projects in both civilian and military life. CT is especially powerful in a situation of uncertainty and complexity and should be taught as early as possible to prepare for an increasingly complex and constantly changing world. The Swiss biologist and pioneer of developmental psychology Jean Piaget aptly described the need as early as 1952, and this quote shall conclude this work:

The principal goal of education in the schools should be creating men and women who are capable of doing new things, not simply repeating what other generations have done; men and women who are creative, inventive, and discoverers, who can be critical and verify, and not accept, everything they are offered.[50]

Fabio Ibrahim is a research associate officer at Helmut-Schmidt University in Hamburg, Germany. His research interests include military psychology, psychometrics and social network analysis. Mr Ibrahim works with specialised forces of the German Armed Forces and police in aptitude diagnostics and stress management. He is motivated to share scientific findings from psychology in the form of personnel development measures to increase the performance of individuals and organisations.

Arnel Ernst is an officer in the German Army and graduated with a Master of Science degree in psychology in 2022. His Master’s Thesis evaluated NATO’s Critical Thinking course. He shows great interest in military psychology and has been part of the Critical Thinking Working Group of the NATO Innovation Hub since 2021.

Dr Doris Zahner is the Chief Academic Officer at the Council for Aid to Education (CAE). In addition, Dr Zahner is an adjunct associate professor at Barnard College and Teachers College, Columbia University, and New York University. She holds a PhD in cognitive psychology and an MSc in applied statistics from Teachers College, Columbia University. Besides her professional and academic interests, Dr Zahner is an avid bird watcher and SCUBA diver.

Dr Yvonne R. Masakowski is a Research Fellow at the US Naval War College, Newport, RI. Her career spans 27 years as a distinguished scientist supporting the US Navy as a Human Factors Psychologist and as a Professor at the US Naval War College. Dr Masakowski also served as a consulting Principal Scientist with the NATO Innovation Hub and contributed to the development of NATO’s online Critical Thinking course.

Prof. Dr Philipp Yorck Herzberg is a professor of personality psychology and psychological assessment at the Helmut Schmidt University of the German Armed Forces in Hamburg. Prof. Herzberg’s primary research focus is personality in partnerships, personality prototypes, optimism, and personality and health. Besides his work as a university professor, he is involved in military projects in applied and operational psychology with military units such as the military police or Human Intelligence forces.

The views contained in this article are the authors’ alone.

[1] Steven Pinker, Rationality: What It Is, Why It Seems Scarce, Why It Matters, London: Allen Lane, an imprint of Penguin Books, 2021.

[2] Amy Shaw, Ou Lydia Liu, Lin Gu, Elena Kardanova, Igor Chirikov, Guirong Li, Shangfeng Hu, et al., “Thinking Critically about Critical Thinking: Validating the Russian HEIghten® Critical Thinking Assessment,” Studies in Higher Education 45, no. 9 (September 1, 2020): 1933–48, https://doi.org/10.1080/03075079.2019.1672640.

[3] Dirk Van Damme and Doris Zahner, “Does Higher Education Teach Students to Think Critically?,” 2022, https://www.oecd-ilibrary.org/content/publication/cc9fa6aa-en.

[4] Sharon Bailin and Harvey Siegel, “Critical Thinking,” In The Blackwell Guide to the Philosophy of Education, edited by Nigel Blake, Paul Smeyers, Richard Smith, and Paul Standish, 181–93, Oxford, UK: Blackwell Publishing Ltd, 2007, https://doi.org/10.1002/9780470996294.ch11.

[5] Samuel Woolley and Philip N. Howard, eds. Computational Propaganda: Political Parties, Politicians, and Political Manipulation on Social Media, Oxford Studies in Digital Politics, New York, NY: Oxford University Press, 2019.

[6] Christopher P. Dwyer, Michael J. Hogan, and Ian Stewart, “An Evaluation of Argument Mapping as a Method of Enhancing Critical Thinking Performance in E-Learning Environments,” Metacognition and Learning 7, no. 3 (December 2012): 219–44, https://doi.org/10.1007/s11409-012-9092-1.

[7] B. Jean Mandernach, “Thinking Critically about Critical Thinking: Integrating Online Tools to Promote Critical Thinking,” InSight: A Journal of Scholarly Teaching 1 (August 1, 2006): 41–50, https://doi.org/10.46504/01200603ma.

[8] Diane F. Halpern, Thought and Knowledge: An Introduction to Critical Thinking, 5th ed., New York, NY: Psychology Press, 2014.

[9] Kelly Y. L. Ku and Irene T. Ho, “Metacognitive Strategies That Enhance Critical Thinking,” Metacognition and Learning 5, no. 3 (December 2010): 251–67, https://doi.org/10.1007/s11409-010-9060-6.

[10] Jennifer S. Clifford, Magdalen M. Boufal, and John E. Kurtz, “Personality Traits and Critical Thinking Skills in College Students: Empirical Tests of a Two-Factor Theory,” Assessment 11, no. 2 (June 2004): 169–76, https://doi.org/10.1177/1073191104263250.

[11] Michael C. Ashton, Kibeom Lee, Philip A. Vernon, and Kerry L. Jang, “Fluid Intelligence, Crystallized Intelligence, and the Openness/Intellect Factor,” Journal of Research in Personality 34, no. 2 (June 2000): 198–207, https://doi.org/10.1006/jrpe.1999.2276.

[12] Donald A. Schön, The Reflective Practitioner: How Professionals Think in Action, New York: Basic Books, 1983.

[13] Deanna Kuhn and David Dean Jr., “Metacognition: A Bridge Between Cognitive Psychology and Educational Practice,” Theory Into Practice 43, no. 4 (November 2004): 268–73, https://doi.org/10.1207/s15430421tip4304_4.

[14] David R. Krathwohl, “A Revision of Bloom’s Taxonomy: An Overview,” Theory Into Practice 41, no. 4 (November 1, 2002): 212–18, https://doi.org/10.1207/s15430421tip4104_2.

[15] John H. Flavell, “Metacognitive Aspects of Problem Solving,” The Nature of Intelligence, 1976.

[16] Paul R. Pintrich, “The Role of Metacognitive Knowledge in Learning, Teaching, and Assessing,” Theory Into Practice 41, no. 4 (November 1, 2002): 219–25, https://doi.org/10.1207/s15430421tip4104_3.

[17] Carlo Magno, “Investigating the Effect of School Ability on Self-Efficacy, Learning Approaches, and Metacognition,” Online Submission 18, no. 2 (2009): 233–44.

[18] Shuangshuang Li, Xuezhu Ren, Karl Schweizer, Thomas M. Brinthaupt, and Tengfei Wang, “Executive Functions as Predictors of Critical Thinking: Behavioral and Neural Evidence,” Learning and Instruction 71 (February 2021): 101376, https://doi.org/10.1016/j.learninstruc.2020.101376.

[19] Adele Diamond, “Executive Functions,” Annual Review of Psychology 64, no. 1 (January 3, 2013): 135–68, https://doi.org/10.1146/annurev-psych-113011-143750.

[20] Philip David Zelazo, Clancy B Blair, and Michael T Willoughby, “Executive Function: Implications for Education. NCER 2017-2000,” National Center for Education Research, 2016.

[21] Peter Facione, NC Facione, and CA Giancarlo, “California Critical Thinking Disposition Inventory: Inventory Manual,” Millbrae, CA: California Academic Press, 2001; PA Facione and NC Facione, “Talking Critical Thinking,” Change: The Magazine of Higher Learning 39, no. 2 (2007).

[22] Jorge Valenzuela, Ana Ma Nieto, and Carlos Saiz, “Critical Thinking Motivational Scale: A Contribution to the Study of Relationship between Critical Thinking and Motivation,” Electronic Journal of Research in Educational Psychology 9, no. 24 (November 22, 2017): 823–48, https://doi.org/10.25115/ejrep.v9i24.1475.

[23] Peter Facione, N Facione, SW Blohm, and C Giancarlo, “California Critical Thinking Skills Test: Test Manual—2002 Revised Edition,” 2002.

[24] Peter Facione, “Critical Thinking: A Statement of Expert Consensus for Purposes of Educational Assessment and Instruction (The Delphi Report),” 1990.

[25] Yuan Li, Kun Li, Wenqi Wei, Jianyu Dong, Canfei Wang, Ying Fu, Jiaxin Li, and Xin Peng, “Critical Thinking, Emotional Intelligence and Conflict Management Styles of Medical Students: A Cross-Sectional Study,” Thinking Skills and Creativity 40 (June 2021): 100799, https://doi.org/10.1016/j.tsc.2021.100799.

[26] Christopher Paparone, “Two Faces of Critical Thinking for the Reflective Military Practitioner,” Military Review 94, no. 6 (2014): 104–10.

[27] Henry A. Leonard, J Michael Polich, Jeffrey D Peterson, Ronald E Sortor, and S Craig Moore, “Something Old, Something New: Army Leader Development in a Dynamic Environment,” RAND CORP SANTA MONICA CA, 2006.

[28] Susan C Fischer, V Alan Spiker, and Sharon L Riedel, “Critical Thinking Training for Army Officers. Volume 2: A Model of Critical Thinking,” ANACAPA SCIENCES INC SANTA BARBARA CA, 2009.

[29] Gary Klein, Michael McCloskey, Rebecca Pliske, and John Schmitt, “Decision Skills Training,” Proceedings of the Human Factors and Ergonomics Society Annual Meeting 41, no. 1 (October 1997): 182–85. https://doi.org/10.1177/107118139704100142.

[30] Karel Van Den Bosch, Anne S Helsdingen, and Marlus M De Beer, “Training Critical Thinking for Tactical Command,” TNO HUMAN FACTORS SOESTERBERG (NETHERLANDS), 2004.

[31] John W. Miller and Jennifer S. Tucker, “Addressing and Assessing Critical Thinking in Intercultural Contexts: Investigating the Distance Learning Outcomes of Military Leaders,” International Journal of Intercultural Relations 48 (September 2015): 120–36, https://doi.org/10.1016/j.ijintrel.2015.07.002.

[32] Mark H. Wiggins and Larry Dubeck, “Fort Leavenworth Ethics Symposium: Exploring The Professional Military Ethic,” Symposium Report. Fort Leavenworth, KS: CGSC Foundation Press, 2011.

[33] MK. Devine, “Professional Military Education for Navy Operational Leaders,” NAVAL WAR COLL NEWPORT RI JOINT MILITARY OPERATIONS DEPT, 2010.

[34] Jacqueline Eaton and Han de Nijs, The 11th NATO Operations Research & Analysis Conference Proceedings (October 2, 2017), https://www.sto.nato.int/publications/STO%20Meeting%20Proceedings/STO-MP-SAS-OCS-ORA-2017/MP-SAS-OCS-ORA-2017-00-0-Conference%20-%20Booklet.pdf.

[35] Yvonne R. Masakowski, NATO HFM RTG 286 Final Report: NATO Leader Development for NATO Multinational Military Operations (August 2022), NATO Science and Technology Organization (STO), Paris, France.

[36] Philip C. Abrami, Robert M. Bernard, Evgueni Borokhovski, Anne Wade, Michael A. Surkes, Rana Tamim, and Dai Zhang, “Instructional Interventions Affecting Critical Thinking Skills and Dispositions: A Stage 1 Meta-Analysis,” Review of Educational Research 78, no. 4 (December 2008): 1102–34. https://doi.org/10.3102/0034654308326084.

[37] Jane S. Halonen, “Demystifying Critical Thinking,” Teaching of Psychology 22, no. 1 (February 1995): 75–81, https://doi.org/10.1207/s15328023top2201_23.

[38] Deanna Kuhn, The Skills of Argument, 1st ed. Cambridge University Press, 1991, https://doi.org/10.1017/CBO9780511571350.

[39] Diane F. Halpern, “Teaching Critical Thinking for Transfer across Domains: Disposition, Skills, Structure Training, and Metacognitive Monitoring,” American Psychologist 53, no. 4 (1998): 449–55. https://doi.org/10.1037/0003-066X.53.4.449.

[40] Kal Alston, “BEGGING THE QUESTION: IS CRITICAL THINKING BIASED?,” Educational Theory 45, no. 2 (June 1995): 225–33, https://doi.org/10.1111/j.1741-5446.1995.00225.x.

[41] John E. McPeck, “Critical Thinking and Subject Specificity: A Reply to Ennis,” Educational Researcher 19, no. 4 (May 1990): 10–12, https://doi.org/10.3102/0013189X019004010.

[42] Deanna Kuhn, “Critical Thinking as Discourse,” Human Development 62, no. 3 (2019): 146–64, https://doi.org/10.1159/000500171. The participants were asked whether they resided in North America or on another continent, so the item was operationalized dichotomously.

[43] Mica R. Endsley, Robert Hoffman, David Kaber, and Emilie Roth, “Cognitive Engineering and Decision Making: An Overview and Future Course,” Journal of Cognitive Engineering and Decision Making 1, no. 1 (March 2007): 1–21, https://doi.org/10.1177/155534340700100101.

[44] Raafat George Saadé, Danielle Morin, and Jennifer D.E. Thomas, “Critical Thinking in E-Learning Environments,” Computers in Human Behavior 28, no. 5 (September 2012): 1608–17. https://doi.org/10.1016/j.chb.2012.03.025.

[45] Andy Field, Discovering Statistics Using IBM SPSS Statistics, 5th edition, Thousand Oaks, CA: SAGE Publications, 2017.

[46] Ou Lydia Liu, Lois Frankel, and Katrina Crotts Roohr, “Assessing Critical Thinking in Higher Education: Current State and Directions for Next-Generation Assessment: Assessing Critical Thinking in Higher Education,” ETS Research Report Series 2014, no. 1 (June 2014): 1–23. https://doi.org/10.1002/ets2.12009.

[47] Yvonne R. Masakowski, Cognitive Bias and Decision-Making, NATO Innovation Hub, Norfolk, VA. March 07, 2022.

[48] Stephen J. Gerras, “Thinking Critically about Critical Thinking: A Fundamental Guide for Strategic Leaders,” Carlisle, Pennsylvania: US Army War College 9 (2008).

[49] Kevin L. Flores, Gina S. Matkin, Mark E. Burbach, Courtney E. Quinn, and Heath Harding, “Deficient Critical Thinking Skills among College Graduates: Implications for Leadership,” Educational Philosophy and Theory 44, no. 2 (January 2012): 212–30, https://doi.org/10.1111/j.1469-5812.2010.00672.x.

[50] Jean Piaget, The Origins of Intelligence in Children, translated by Margaret Cook, New York: W W Norton & Co, 1952, https://doi.org/10.1037/11494-000.